Individuals around the world are accessing critical services through digital technology more than ever before. According to Statista, there were 4.66 billion active internet users worldwide as of January 2021. This accounts for almost 60% of the global population. And of this total, 93% of users accessed the internet via mobile devices.

To keep this growing number of users safe and ensure a smooth user experience, organizations from government agencies to private businesses are using biometric technology for the purposes of identity verification and onboarding. In addition to improving remote access to services, this shift also offers increased convenience for customers and organizations alike — particularly in the wake of the COVID-19 pandemic.

But as more businesses invest in creating a better digital customer experience, what happens when certain demographic groups of individuals are faced with systemic barriers that have unknowingly been built into the technology?

It’s increasingly clear that biometric systems, such as facial recognition technology, can exhibit significant demographic and racial bias. To help you tackle the issue, we discuss the root causes of bias in these systems, why it’s important to recognize, and how your organization can minimize demographic bias in your onboarding process.

What is an automated biometric system?

Biometric systems measure and analyze the physiological or behavioral traits of an individual for the purposes of identity verification and authentication. This is often conducted through biometric authentication using fingerprint and facial recognition technology.

Automated biometric authentication systems use artificial intelligence to perform that verification. The system confirms a person’s identity by comparing the biometric input with biometric data that has been previously stored.

To do this, an automated biometric system consists of:

- A capture device. This is the device through which biometric samples and images are acquired, for example, a camera in a facial recognition system.

- A database. This is where biometric information and any other personal data acquired is stored.

- Signal processing algorithms. These are used to estimate the quality of the acquired sample, identify the region of interest (e.g., a face or fingerprint), and extract distinguishing features from it.

- Comparison and decision algorithms. These are used to determine how similar two biometric samples are and to decide whether they match.
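As a rough illustration of the comparison and decision step, the sketch below scores two feature vectors with cosine similarity and applies a threshold. The function names, the toy three-value vectors, and the 0.8 threshold are all assumptions made for this example; real systems use much larger feature vectors and their own scoring methods.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_match(probe, enrolled, threshold=0.8):
    """Decide whether a probe sample matches the stored template.

    The threshold is a hypothetical value chosen for illustration.
    """
    return cosine_similarity(probe, enrolled) >= threshold


# Toy feature vectors (invented for this sketch, not real biometric data).
enrolled = [0.9, 0.1, 0.4]
same_person = [0.85, 0.15, 0.38]
different_person = [0.1, 0.9, 0.2]

print(is_match(same_person, enrolled))       # similar vectors
print(is_match(different_person, enrolled))  # dissimilar vectors
```

Raising the threshold makes false matches less likely but makes it more likely that a genuine user is rejected, which is why the choice of threshold matters for the error rates discussed later.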

There are many advantages to using biometric technology in the digital age, including convenience and high levels of security. However, there are also many disadvantages, including high costs and bias.

In the following section, we’ll cover demographic bias in biometric authentication and the effects of this type of systemic bias.

What is biometric bias?

Biometric bias occurs when an algorithm is unable to perform the task it was designed for, namely the automated recognition of individuals based on their biological and behavioral characteristics, in a fair and accurate manner.

Biometric algorithms are considered to be biased if there are significant differences in how they operate when interacting with different demographic groups of users. Consequently, certain groups of users are privileged while other groups are disadvantaged.

Who is affected by demographic bias in biometrics?

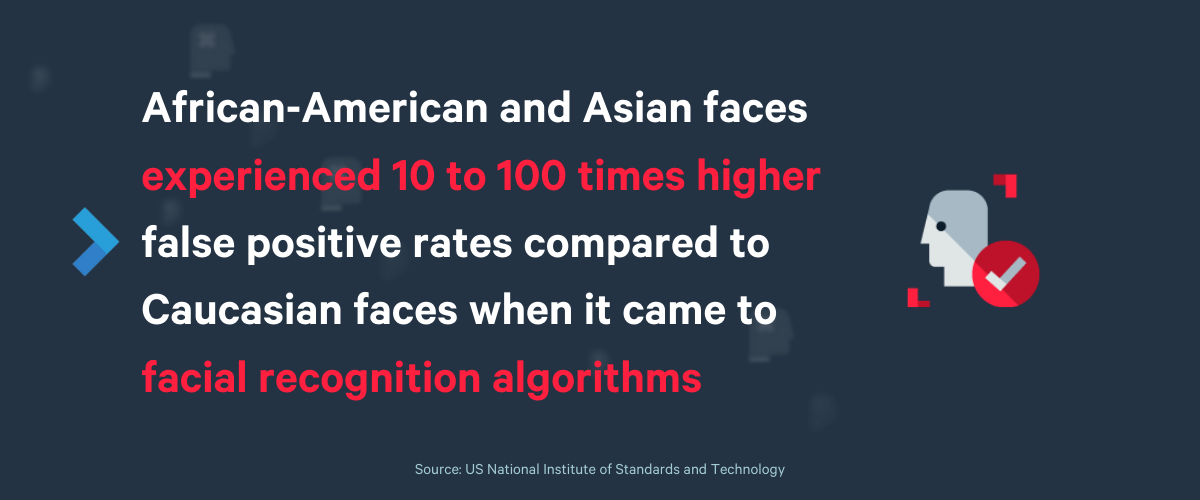

Several studies have demonstrated clear demographic bias in biometric authentication technology, finding that certain demographics experienced low classification accuracy and low biometric performance. The US National Institute of Standards and Technology (NIST) found that face recognition algorithms produced false positive rates 10 to 100 times higher for African-American and Asian faces than for Caucasian faces.

False positives occur when the biometric system incorrectly matches the faces of two different people. Female and younger faces also tended to experience higher rates of false negatives, which occur when the biometric system fails to match two images of the same person.
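To make these two error types concrete, here is a minimal sketch that computes false positive and false negative rates separately for each demographic group. The function name, the group labels, and the trial data are all invented for illustration; they are not results from NIST or any other study.

```python
from collections import defaultdict


def error_rates_by_group(trials):
    """Compute false positive and false negative rates per group.

    Each trial is a tuple: (group, same_person, system_said_match).
    """
    counts = defaultdict(
        lambda: {"fp": 0, "different": 0, "fn": 0, "same": 0}
    )
    for group, same_person, said_match in trials:
        c = counts[group]
        if same_person:
            c["same"] += 1
            if not said_match:
                c["fn"] += 1  # same person, but the system rejected
        else:
            c["different"] += 1
            if said_match:
                c["fp"] += 1  # different people, but the system matched

    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "false_positive_rate": (
                c["fp"] / c["different"] if c["different"] else None
            ),
            "false_negative_rate": c["fn"] / c["same"] if c["same"] else None,
        }
    return rates


# Made-up toy trials, for illustration only.
trials = [
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", False, False), ("group_a", False, True),
    ("group_a", True, True), ("group_a", True, True),
    ("group_b", False, True), ("group_b", False, True),
    ("group_b", False, False), ("group_b", False, False),
    ("group_b", True, True), ("group_b", True, False),
]

rates = error_rates_by_group(trials)
for group, r in sorted(rates.items()):
    print(group, r)
```

Reporting error rates per group, rather than as a single overall figure, is what makes differences between groups visible in the first place.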

Other scientific studies have also confirmed the low biometric performance in female and younger individuals, and that dark-skinned females, in particular, experience lower classification accuracy.

What are the consequences of demographic bias in biometric authentication?

Reducing demographic bias in biometrics can be challenging, especially if there is a lack of awareness at the outset. However, a lack of consistency and accuracy in biometric authentication software can have negative consequences for both the user and the organization.

While certain instances of bias are minor inconveniences, others can cause serious harm and disadvantage to certain individuals. Demographic and racial bias in biometrics during onboarding processes can also deny customers access to new and essential digital services.

Not only do high error rates increase the potential of fraud, particularly in the case of false positive matches, but they also discriminate against users who may experience false negative matches.

What is the solution to biometric bias?

A Forbes article discusses how the biases in biometric technologies are a result of either bugs in the AI algorithms or issues with the data included in the learning models. What this means is that artificial intelligence and biometric authentication technology are not inherently racist. These are technological issues that can be resolved through improved training and updated technologies. The biggest challenge is eliminating bias at the development level of these technologies.

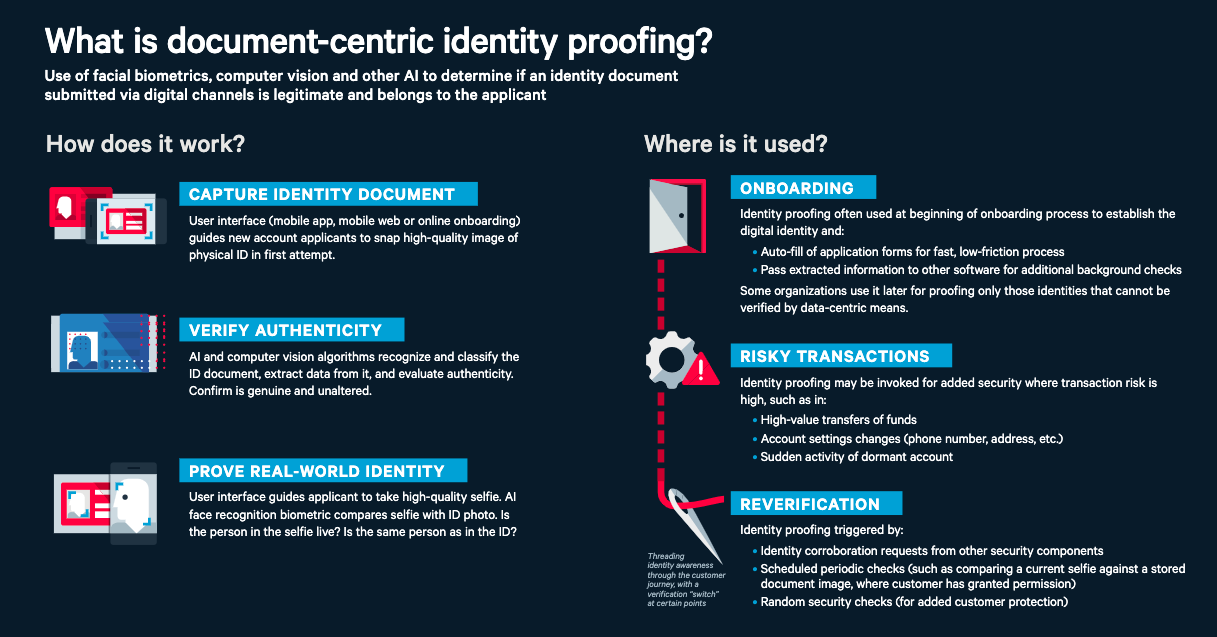

Document-centric identity verification (i.e., comparing a person’s face to the photo on a government-issued document) has been shown to be one of the least biased methods of identity proofing.

As an organization, it’s important to know the signs of biometric bias and how to minimize them in your onboarding process. Two of the top questions to ask when seeking to minimize demographic biometric bias are:

- Is the algorithm accurate, but also consistent? A tool that is slightly less accurate but more consistent could be more equitable.

- Are the training and testing data aligned with the data the system will analyze? For biometric authentication to be more accurate and consistent, the AI algorithm should be trained using data similar to what it will be analyzing after the system has been implemented.
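The accuracy-versus-consistency question above can be made concrete with a small sketch. It compares two hypothetical tools by their average error rate across groups and by the gap between their best-served and worst-served group. The tool names, group labels, and error rates are assumptions invented for this example, not measurements of any real product.

```python
from statistics import mean


def summarize(error_by_group):
    """Return the average error rate and the gap between the
    worst-served and best-served group."""
    values = list(error_by_group.values())
    return {
        "mean_error": mean(values),
        "gap": max(values) - min(values),
    }


# Hypothetical per-group error rates (illustrative only).
tool_x = {"group_a": 0.01, "group_b": 0.09}  # more accurate on average
tool_y = {"group_a": 0.06, "group_b": 0.07}  # more consistent across groups

summary_x = summarize(tool_x)
summary_y = summarize(tool_y)
print("tool_x", summary_x)
print("tool_y", summary_y)
```

In this toy comparison, the first tool has the lower average error, but one group sees far more errors than the other. The second tool is slightly less accurate overall while treating both groups almost equally, which is the trade-off the first question asks you to weigh.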

The future of biometrics

Biometrics will continue to be a growing and integrated part of our lives and interactions with the external world. While there are many discussions around privacy and ethical implications, societies are generally becoming more comfortable with the use of biometrics in the day-to-day.

It’s more important than ever to minimize these unintentional biases as technology continues to evolve at a rapid pace. While there have been massive improvements in biometric technology, such as facial recognition, there is still plenty of work to be done to bring bias levels down.

Moving forward

Biometric bias can result in unintentional discrimination and can limit access to essential services for those affected. In a world anchored by digital technology, it’s clear that biometric authentication during onboarding should not be a barrier for individuals accessing services.

Fortunately, minimizing demographic bias is a high priority for many businesses. Gartner predicted that by 2022, 80% of RFPs for document-centric identity proofing would have clear requirements for minimizing demographic bias.

By embracing document-centric identity solutions and understanding the causes and consequences of demographic bias, it will be much easier to minimize unintentional discrimination during your onboarding processes and avoid creating unnecessary aggravation for your customers.