As companies increasingly rely on artificial intelligence for identity verification and fraud prevention, they face new kinds of threats – deepfake videos bypassing facial recognition, synthetic voices fooling biometric checks, and sophisticated replay or injection attacks. Traditional cybersecurity testing isn't sufficient because attackers now target the AI models themselves, exploiting their vulnerabilities directly rather than just hacking software or infrastructure.

To address these evolving risks, modern AI-driven businesses need specialized teams capable of continuously simulating advanced, AI-specific attacks against their own systems. At Mitek, we’ve created exactly that – a dedicated team of expert ethical hackers focused solely on challenging our identity-verification solutions. Known internally as our "Purple Team," this group proactively uncovers vulnerabilities in our facial, voice, and document liveness modules by simulating real-world, AI-based attacks.

Born from an internal ethical hacking competition that attracted some of the most creative fraud simulations we’ve ever seen, our Purple Team includes professional AI vulnerability hunters. Unlike traditional cybersecurity teams, they do not directly implement defenses or respond to external threats. Instead, their sole mission is to aggressively test our AI models using cutting-edge adversarial techniques – including hyper-realistic 3D masks, GAN-generated deepfake imagery, voice cloning technologies, and document spoofing techniques. Their findings immediately feed back into product development, enabling rapid patches and continuous improvement.

The effectiveness of this strategy has been recognized externally: Mitek’s Purple Team recently won a prestigious Gold Globee Award in Cybersecurity for their innovative contributions. Their rigorous testing approach has helped position Mitek’s AI fraud detection technologies among industry leaders in independent evaluations.

Beyond traditional SDLC: Expanding security into AI model governance

The software development life cycle (SDLC) for AI products differs from that of traditional software in a few key ways, demanding an expanded security focus. In conventional software, security might center on code review, penetration testing, and patching known Common Vulnerabilities and Exposures (CVEs). But in an AI-specific lifecycle, many risks stem from data and model behavior rather than explicit code flaws. Issues like model bias, concept drift, or adversarial vulnerability require continual oversight even after deployment. This is where Purple Teams shine – they bring rigorous security practices into each stage of the AI model lifecycle as part of model governance.

Model governance refers to the frameworks and processes that ensure an AI model is reliable, secure, fair, and compliant throughout its life. Purple Teams contribute by performing independent, adversarial testing and validation as a core governance function. In practice, that means the Purple Team (or an equivalent independent validation team) acts separately from the data scientists who developed the model, bringing an objective attacker’s perspective. By validating models against criteria the original developers might overlook, they catch vulnerabilities and biases early. Independent validation datasets are often employed – these are test data sets curated specifically to challenge the model (for example, containing adversarial inputs or edge cases not seen in training) – to see how the AI behaves under worst-case conditions.
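To make the idea of an independent validation dataset concrete, here is a minimal sketch of how results on such a set could be scored. It uses the two error rates defined for presentation attack detection in ISO/IEC 30107-3: APCER (attacks wrongly accepted as genuine) and BPCER (genuine samples wrongly flagged as attacks). The `Sample` class, the function names, and the tiny dataset are hypothetical and only for illustration, and the rates are pooled across attack types for brevity rather than reported per attack type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One item of a hypothetical independent validation set."""
    is_attack: bool         # ground truth: True for a spoof, deepfake, or injected sample
    predicted_attack: bool  # model decision: True if the model flagged it as an attack


def apcer(samples):
    """Share of attack samples that the model accepted as genuine."""
    attacks = [s for s in samples if s.is_attack]
    if not attacks:
        raise ValueError("validation set contains no attack samples")
    missed = sum(1 for s in attacks if not s.predicted_attack)
    return missed / len(attacks)


def bpcer(samples):
    """Share of genuine samples that the model wrongly flagged as attacks."""
    genuine = [s for s in samples if not s.is_attack]
    if not genuine:
        raise ValueError("validation set contains no genuine samples")
    rejected = sum(1 for s in genuine if s.predicted_attack)
    return rejected / len(genuine)


# Illustrative data only: 4 attack samples (1 missed), 5 genuine samples (1 rejected).
validation_set = [
    Sample(True, True), Sample(True, True), Sample(True, False), Sample(True, True),
    Sample(False, False), Sample(False, False), Sample(False, True),
    Sample(False, False), Sample(False, False),
]

print(f"APCER: {apcer(validation_set):.2f}")  # 1 of 4 attacks missed
print(f"BPCER: {bpcer(validation_set):.2f}")  # 1 of 5 genuine samples rejected
```

Tracking both numbers matters because a model can trivially lower one by raising the other. An independent team curating the attack samples keeps the APCER figure honest.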

Leading AI risk standards reinforce this approach. The new ISO/IEC 23894:2023 standard (AI risk management guidance) urges organizations to integrate risk management throughout the AI development lifecycle, highlighting AI-specific risk sources and the need for tailored controls [1, 2]. Similarly, the NIST AI Risk Management Framework (AI RMF), released in 2023, emphasizes testing and validation to ensure AI systems are “secure and resilient against threats” [3]. Under NIST’s “Measure” and “Manage” functions, organizations are encouraged to assess AI risks continuously and respond to them – which includes techniques like red teaming models and stress-testing with adversarial examples [4]. In fact, robust model validation often “employ[s]…testing against adversarial examples” as a best practice [5]. By doing this kind of testing, Purple Teams directly support compliance with emerging AI governance frameworks, from NIST to the EU AI Act’s anticipated risk requirements [6].

In practice, a Purple Team involved in model governance might perform tasks such as: conducting attack tree analyses to map out how an AI system could be exploited, running controlled adversarial attacks to probe the model’s weak spots, verifying that mitigation measures (like input filters or anomaly detectors) work as intended, and ensuring documentation and risk reports are updated. They act as an independent “third line of defense” for AI models – analogous to how financial institutions require independent model validation for high-risk algorithms. This governance role extends the traditional DevSecOps pipeline into a continuous AI model assurance pipeline, where models are not only tested before go-live but regularly challenged throughout their operation by folks who think like attackers.
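An attack tree analysis like the one mentioned above can be sketched in a few lines. The example below is an illustration under stated assumptions, not a description of Mitek's internal tooling: the `Node` class, the `attack_paths` function, and the specific tree are hypothetical, although the leaf techniques (screen replay, 3D masks, virtual cameras, deepfake video) are the ones discussed in this article. An OR node is satisfied by any one child, an AND node needs all of its children.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import product


@dataclass
class Node:
    """A goal in the attack tree; leaves are concrete attacker actions."""
    name: str
    gate: str = "LEAF"  # "OR", "AND", or "LEAF"
    children: list[Node] = field(default_factory=list)


def attack_paths(node):
    """Return every combination of leaf actions that achieves this node's goal."""
    if node.gate == "LEAF":
        return [[node.name]]
    child_paths = [attack_paths(child) for child in node.children]
    if node.gate == "OR":
        # Any single child's path is enough.
        return [path for paths in child_paths for path in paths]
    if node.gate == "AND":
        # One path from every child is required; combine them.
        return [sum(combo, []) for combo in product(*child_paths)]
    raise ValueError(f"unknown gate type: {node.gate}")


# Hypothetical tree for one attacker goal.
root = Node("Bypass facial liveness", "OR", [
    Node("Presentation attack", "OR", [
        Node("Screen replay"),
        Node("3D mask"),
    ]),
    Node("Digital injection", "AND", [
        Node("Install virtual camera"),
        Node("Feed deepfake video"),
    ]),
])

for path in attack_paths(root):
    print(" + ".join(path))
```

Each printed line is one scenario the team could schedule for a controlled test, which keeps the test plan traceable back to the analysis.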

Adversarial robustness: Battling AI-specific threats

Why are Purple Teams so crucial for AI? One major reason is the array of AI-specific threats that traditional security teams might not be prepared to handle. Let’s look at some of these threats – and how Purple Teams help counter them:

-

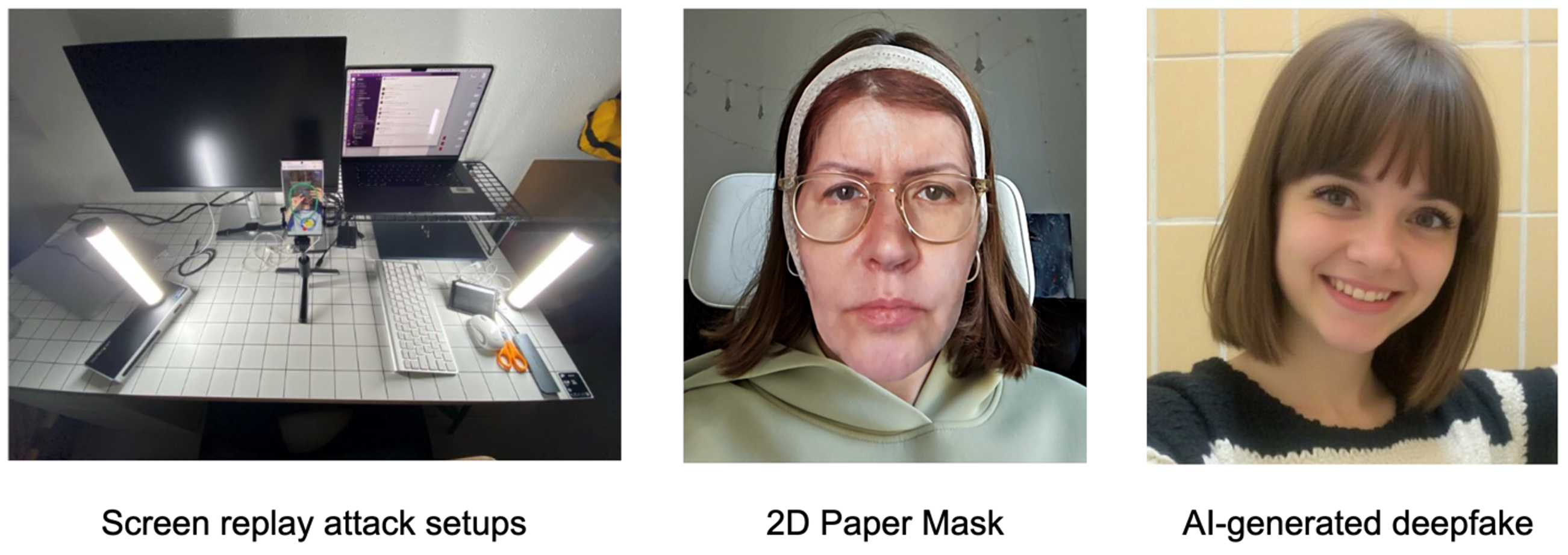

Facial deepfakes and presentation attacks: AI-generated fake images, videos, or voices can defeat biometric checks or spread disinformation. For instance, face recognition or liveness detection systems could be fooled by a highly convincing deepfake video. Purple Team members specializing in computer vision attacks will continually test the company’s models against the latest deepfake techniques. At Mitek, this means using face generators and deepfake engines to ensure the verification AI can detect imposters. By simulating deepfake attacks in-house, Purple Teams help build resilience so that fraudsters’ synthetic identities are caught in real deployments. Picture below illustrates some of the attacks on the facial liveness engine including the setup for screen replay attacks and examples of a realistic mask and generated deepfake Purple Team uses for testing.

- Document liveness detection testing: Modern fraudsters frequently leverage Photoshop templates, online fraudulent services, and traditional cut-and-paste methods to forge identity documents. Purple Teams proactively simulate these tactics by generating counterfeit documents using readily available templates, specialized printing setups (such as inkjet and laser printers), and a variety of paper and lamination techniques. Additionally, screen replay attacks – displaying scanned or digitally altered documents via screens – are thoroughly tested to ensure robustness. By rigorously evaluating document liveness detection against the same sophisticated techniques employed by fraudsters, Purple Teams significantly strengthen the system's capability to reliably distinguish authentic documents from high-quality forgeries. The picture below demonstrates the setup for document liveness testing as well as the process of portrait substitution of the ID document.

- Digital injection attack simulation in KYC: Fraudsters increasingly exploit digital injection attacks to bypass KYC processes by manipulating or injecting fake digital content into data streams during verification. Purple Teams simulate these attacks by deploying sophisticated virtual camera setups, OS-level API hooking, and emulator-based spoofing to inject pre-recorded or synthesized content directly into verification channels. By rigorously testing KYC systems against such digital injection scenarios, the Purple Team ensures models reliably detect and mitigate attempts to digitally manipulate or fabricate biometric and document data in real-world deployments. In practice, they might develop attack trees enumerating possible evasion or manipulation techniques and then systematically execute those attacks.

The key advantage of Purple Teams in all these scenarios is their agility and focus. Because they think like attackers but operate within the organization, they can swiftly pivot to new threats. Today it might be deepfakes, tomorrow a novel prompt injection or a clever data poisoning method – a Purple Team keeps an AI company ahead of the curve.

Building a Purple Team early + key takeaways

Purple Teams are proving to be the unsung heroes of secure AI deployment. They expand the horizons of traditional cybersecurity into the domain of AI model risk, bringing continuous adversarial testing, collaborative defense, and governance oversight to the AI lifecycle. Mitek’s experience – from internal ethical hacking efforts to an award-winning Purple Team – shows that investing in such capabilities pays off in resilient AI products and industry recognition. It was also a journey that required significant commitment, because it is both a cultural and an operational shift. Reflecting on that journey, there are several things our organization did well:

- Started early: We didn’t wait for a major incident to incorporate security into our AI projects. We set up a Purple Team early in our model’s lifecycle. Our small team with a dual attack/defense mission was able to identify issues in model architecture or data before they became entrenched problems.

- Hired and trained hybrid talent: We looked for team members with offensive security skills and AI/ML knowledge – and in some instances paired up our data scientists with seasoned ethical hackers. The combination of expertise is key. We encouraged training in adversarial ML techniques for security staff and, conversely, taught AI engineers about the security mindset. This cross-pollination builds a true Purple capability.

- Integrated into model governance: The Purple Team became a formal part of our AI governance framework. They performed independent validation of models (remember, no one should mark their own homework when it comes to AI). We learned to leverage their findings in go/no-go decisions for model deployment. We also gave them access to resources to create independent test datasets and to run attack simulations freely, with support from leadership that prioritized long-term trust over short-term model accuracy gains.

- Continuously evolved and iterated: Purple Team exercises became a regular cadence, not a one-off. Threats in AI can evolve rapidly – what secures your computer vision model today might not suffice against next year’s deepfake advancements. So we established a cycle of testing, learning, and improving. For instance, after each major model update (or new AI feature launch), we tasked the Purple Team with a fresh red-teaming sprint, then had them work with the Blue side to patch and improve, feeding those changes back into development for faster, hardened releases. This iterative loop ensured security kept pace with innovation.

- Fostered a culture of security in AI teams: Lastly, we worked to empower our Purple Team to act as ambassadors of AI security within our organization. Their insights helped educate the rest of the company – developers, product managers, leadership – about AI risks and defenses. When data scientists see the creative attacks the Purple Team comes up with, it can inspire them to build models with security in mind from the start. AI security should be a shared responsibility, with Purple Team findings driving updates in training, tooling, and policies across the AI pipeline.
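As a rough illustration of how Purple Team findings could feed a go/no-go decision after each red-teaming sprint, the sketch below compares the attack acceptance rate measured for each attack type against a single release threshold. Everything here is an assumption made for the example: the `release_decision` function, the attack-type labels, the measured rates, and the 5% threshold are invented and do not describe Mitek's actual criteria.

```python
def release_decision(apcer_by_attack, max_apcer):
    """Return ("go" or "no-go", attack types whose acceptance rate exceeds the limit)."""
    failing = sorted(
        attack for attack, rate in apcer_by_attack.items() if rate > max_apcer
    )
    return ("no-go" if failing else "go", failing)


# Illustrative numbers only: share of attacks of each type the model accepted as genuine.
sprint_results = {
    "screen_replay": 0.01,
    "3d_mask": 0.02,
    "deepfake_injection": 0.08,
}

decision, failing = release_decision(sprint_results, max_apcer=0.05)
print(decision, failing)
```

Evaluating each attack type separately, rather than one pooled average, prevents a strong result on common attacks from hiding a weakness against a newer technique.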

By establishing a Purple Team early and giving them the mandate to relentlessly probe and protect your AI, you prepare your company for the realities of AI risk. In a world of deepfakes, synthetic identities, and clever prompt hacks, this fusion of red and blue is the best bet to stay one step ahead. AI-driven innovation doesn’t have to come at the cost of security – with Purple Teams on the job, you can – as we have – deliver cutting-edge AI products that are robust, trustworthy, and well-defended against the threats of today and tomorrow.

References:

1, 2: ISO/IEC 23894 – A new standard for risk management of AI, AI Standards Hub

3, 4, 5: NIST AI Risk Management Framework (AI RMF), Palo Alto Networks

6: Generative AI red teaming: Tips and techniques for putting LLMs to the test, CSO Online
